On Classroom Observations

As STEM education matures, the field will profit from tools that support teacher growth and that support rich instruction. A central design issue concerns domain specificity. Can generic classroom observation tools suffice, or will the field need tools tailored to STEM content and processes? If the latter, how much will specifics matter? This article begins by proposing desiderata for frameworks and rubrics used for observations of classroom practice. It then addresses questions of domain specificity by focusing on the similarities, differences, and affordances of three observational frameworks widely used in mathematics classrooms: Framework for Teaching, Mathematical Quality of Instruction, and Teaching for Robust Understanding. It describes the ways that each framework assesses selected instances of mathematics instruction, documenting the ways in which the three frameworks agree and differ. Specifically, these widely used frameworks disagree on what counts as high-quality instruction: questions of whether a framework valorizes orderly classrooms or the messiness that often accompanies inquiry, and which aspects of disciplinary thinking are credited, are consequential. This observation has significant implications for tool choice, given that these and other observation tools are widely used for professional development and for teacher evaluations.

Notes

To be sure, teacher educators and professional developers hold tacit theories of proficiency, which shape their emphases in teacher preparation and professional development. The question is the degree to which such ideas are explicit, grounded in the literature, and empirically assessed.

The authoring team consists of members of the TRU team. We have done our best to provide enough evidence to allow readers to come to their own judgments about possible issues of bias.

Matters of pedagogy and content are intertwined. For example, a “demonstrate and practice” form of pedagogy may inhibit certain kinds of inquiry that are highly valued in STEM. Thus a rubric that assigns high value to such pedagogy may downgrade classrooms in which there are somewhat unstructured exploratory investigations. The question is how much disciplinary scores matter in assigning the overall score to an episode of instruction, and whether a more fine-grained examination of disciplinary practices reveals things not reflected in a general rubric.

The authors will gladly send interested readers our analysis of Video B, which is written up in detail comparable to the write-ups for Videos A and C.

In accord with our permission to examine the videos from the MET database, we have done everything we can to honor the confidentiality of the research process and to remove possible identifiers of the individuals, cities and schools involved.

The other video with comparably high FfT scores fared similarly less well on the MQI scale, so our choice for exposition does not represent an anomalous example.

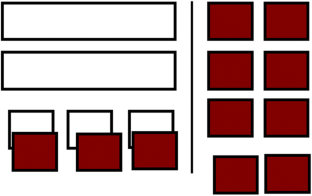

These represent “2x + 3 = −5” (Fig. 1) and “−5 = 3x − 2” (Fig. 2), respectively, although the equations are not yet written. They will appear later in the lesson.

There are also dark rectangles representing (−x). They cancel out the light rectangles.

We did catch some slips on the part of the teacher, though not enough for us to downgrade the lesson that much. We note that of the 11 videos in our sample of “very high or very low” scores, 9 received MQI scores of 1 for errors and imprecisions, so the MQI scoring on that component of the rubric was very stringent.

This is parallel to the issue of assessing student understanding. A test of STEM content can focus on things that are superficial, or on real sense-making. The same is the case for the assessment of classroom environments.

References

- Beeby, T., Burkhardt, H., & Caddy, R. (1980). SCAN: Systematic classroom analysis notation for mathematics lessons. Nottingham: Shell Centre for Mathematics Education.

- Borko, H., Eisenhart, M., Brown, C., Underhill, R., Jones, D., & Agard, P. (1992). Learning to teach hard mathematics: Do novice teachers and their instructors give up too easily? Journal for Research in Mathematics Education, 23(3), 194–222.

- Boston, M., Bostic, J., Lesseig, K., & Sherman, M. (2015). A comparison of mathematics classroom protocols. Mathematics Teacher Educator, 3(2), 154–175.

- California Department of Education (2018). Science, Technology, Engineering, & Mathematics (STEM) information. Accessed April 2, 2018 from https://www.cde.ca.gov/pd/ca/sc/stemintrod.asp.

- Cohen, D., Raudenbush, S., & Ball, D. (2003). Resources, instruction, and research. Educational Evaluation and Policy Analysis, 25(2), 1–24.

- Common Core State Standards Initiative (2010). Common Core State Standards for Mathematics. Downloaded June 4, 2010 from http://www.corestandards.org/the-standards.

- Danielson, C. (2011). The Framework for Teaching evaluation instrument, 2011 Edition. Downloaded April 1, 2012, from http://www.danielsongroup.org/article.aspx?page=FfTEvaluationInstrument.

- Danielson, C. (August 14, 2015). Personal communication.

- Danielson Group. (2015). The Framework. Downloaded July 6, 2015, from https://danielsongroup.org/framework/.

- Gitomer, D., & Bell, C. (Eds.). (2016). Handbook of research on teaching (5th ed.). Washington, DC: American Educational Research Association.

- Glaser, B., & Strauss, A. (1967). The discovery of grounded theory: Strategies for qualitative research. Chicago: Aldine.

- Hill, H., Charalambous, C., & Kraft, M. (2012). When rater reliability is not enough: Teacher observation systems and a case for their generalizability. Educational Researcher, 41(2), 56–64. https://doi.org/10.3102/0013189X12437203.

- Junker, B., Matsumura, L. C., Crosson, A., Wolf, M. K., Levison, A., Weisberg, Y., & Resnick, L. (2004). Overview of the instructional quality assessment. San Diego: Paper presented at the annual meeting of the American Educational Research Association.

- Kane, M. T. (1992). An argument-based approach to validity. Psychological Bulletin, 112(3), 527–535.

- Kane, M. T. (2013). Validating the interpretations and uses of test scores. Journal of Educational Measurement, 50(1), 1–73.

- Learning Mathematics for Teaching Project. (2011). Measuring the mathematical quality of instruction. Journal of Mathematics Teacher Education, 14, 25–47. https://doi.org/10.1007/s10857-010-9140-1.

- Ma, L. (1999). Knowing and teaching elementary mathematics. Mahwah: Erlbaum.

- Marder, M., & Walkington, C. (2012) UTeach Teacher Observation Protocol. Downloaded April 1, 2012, from https://wikis.utexas.edu/pages/viewpageattachments.action?pageId=6884866&sortBy=date&highlight=UTOP_Physics_2009.doc.&.

- Measures of Effective Teaching Longitudinal Database. (2016). http://www.icpsr.umich.edu/icpsrweb/METLDB/. “Instruments,” http://www.icpsr.umich.edu/icpsrweb/content/METLDB/grants/instruments.html. Accessed January 1, 2016.

- Measures of Effective Teaching Project (2010). The MQI protocol for classroom observations. Retrieved on July 4, 2012, from http://metproject.org/resources/MQI_10_29_10.pdf

- Measures of Effective Teaching Project (2012). Gathering feedback for teaching. Retrieved on July 4, 2012, from the Bill and Melinda Gates Foundation website: http://metproject.org/downloads/MET_Gathering_Feedback_Research_Paper.pdf

- Messick, S. (1989). Validity. In R. L. Linn (Ed.), Educational measurement (3rd ed., pp. 13–103). New York: American Council on Education and Macmillan.

- National Research Council. (2001). Adding it up: Helping children learn mathematics. Washington DC: National Academy Press.

- PACT Consortium (2012) Performance Assessment for California Teachers. (2012) A brief overview of the PACT assessment system. Downloaded April 1, 2012, from http://www.pacttpa.org/_main/hub.php?pageName=Home.

- Partnership for Assessment of Readiness for College and Careers. (2014). About PARCC. Downloaded April 1, 2014 from http://www.parcconline.org/about.

- Pianta, R., La Paro, K., & Hamre, B. K. (2008). Classroom assessment scoring system. Baltimore: Paul H. Brookes.

- Schoenfeld, A. H. (2013). Classroom observations in theory and practice. ZDM, the International Journal of Mathematics Education, 45, 607–621. https://doi.org/10.1007/s11858-012-0483-1.

- Schoenfeld, A. H. (2014). What makes for powerful classrooms, and how can we support teachers in creating them? Educational Researcher, 43(8), 404–412. https://doi.org/10.3102/0013189X1455.

- Schoenfeld, A. H. (July 9, 2016). Personal communication.

- Schoenfeld, A. H. (2017). Uses of video in understanding and improving mathematical thinking and teaching. Journal of Mathematics Teacher Education, 20(5), 415–432. https://doi.org/10.1007/s10857-017-9381-3.

- Schoenfeld, A. H. (2018). Video analyses for research and professional development: The teaching for robust understanding (TRU) framework. In C. Y. Charalambous & A.-K. Praetorius (Eds.), Studying instructional quality in mathematics through different lenses: In search of common ground. An issue of ZDM: Mathematics Education. Manuscript available at https://doi.org/10.1007/s11858-017-0908-y.

- Schoenfeld, A. H., & Kilpatrick, J. (2008). Toward a theory of proficiency in teaching mathematics. In D. Tirosh & T. Wood (Eds.), International handbook of mathematics teacher education, volume 2: Tools and processes in mathematics teacher education (pp. 321–354). Rotterdam: Sense Publishers.

- Schoenfeld, A. H., Floden, R. E., & The Algebra Teaching Study and Mathematics Assessment Project. (2014). The TRU Math Scoring Rubric. Berkeley, CA & E. Lansing, MI: Graduate School of Education, University of California, Berkeley & College of Education, Michigan State University. Retrieved from http://ats.berkeley.edu/tools.html and http://map.mathshell.org/trumath.php.

- Schoenfeld, A. H., Floden, R. E., & The Algebra Teaching Study and Mathematics Assessment Project. (2015). TRU Math Scoring Guide Version Alpha. Berkeley, CA & E. Lansing, MI: Graduate School of Education, University of California, Berkeley & College of Education, Michigan State University. Retrieved from http://ats.berkeley.edu/tools.html and http://map.mathshell.org/trumath.php.

- Smarter Balanced Assessment Consortium. (2014). Home. Downloaded April 1, 2014, from http://www.smarterbalanced.org/.

- Smarter Balanced Assessment Consortium. (2015). Content specifications for the summative assessment of the common core state standards for mathematics (revised draft, July 2015). Downloaded August 1, 2015, from http://www.smarterbalanced.org/wordpress/wp-content/uploads/2011/12/Mathematics-Content-Specifications_July-2015.pdf

- St. John, M. (2007). Investing in an improvement infrastructure. Downloaded April 1, 2011, from http://nmpmse.pbworks.com/f/building+an+infrastructure.pdf

- Stigler, J., & Hiebert, J. (1999). The teaching gap. New York: Free Press.

- Thompson, P., & Thompson, A. (1994). Talking about rates conceptually, part I: A teacher's struggle. Journal for Research in Mathematics Education, 25(3), 279–303.

- University of Michigan. (2006). Learning mathematics for teaching. A coding rubric for measuring the mathematical quality of instruction (Technical Report LMT1.06). Ann Arbor: University of Michigan, School of Education.

Acknowledgments

The authors gratefully acknowledge support for this work from The Algebra Teaching Study (NSF Grant DRL-0909815 to PI Alan Schoenfeld, U.C. Berkeley, and NSF Grant DRL-0909851 to PI Robert Floden, Michigan State University) and from The Mathematics Assessment Project (Bill and Melinda Gates Foundation Grants OPP53342 to PIs Alan Schoenfeld, U.C. Berkeley, and Hugh Burkhardt and Malcolm Swan, The University of Nottingham). They are grateful for the ongoing collaborations and support from members of the Algebra Teaching Study and Mathematics Assessment Project teams.

Author information

Authors and Affiliations

- Education, EMST, M.C. 1670, University of California, Berkeley, 2121 Berkeley Way, Berkeley, CA, 94720-1670, USA: Alan H. Schoenfeld, Fady El Chidiac, Dennis Gillingham, Heather Fink, Alyssa Sayavedra, Anna Weltman & Anna Zarkh

- College of Education, Erickson Hall, Michigan State University, East Lansing, MI, 48824-1034, USA: Robert Floden

- Northwestern University School of Education & Social Policy, Walter Annenberg Hall, Northwestern University, 2120 Campus Drive, Evanston, IL, 60208, USA: Sihua Hu
